Our Approach: Agile Working

What's the benefit of agile working and continuous iteration if you're a blue chip? Get close to your end user, and constantly bring them value!

Discover five key lessons from implementing OpenTelemetry in microservices.

Here are five lessons learned along the way:

Initially, we wanted to implement a shared logging schema across our services. We considered the Elastic Common Schema (ECS) and the OpenTelemetry (OTel) Semantic Conventions. Elastic has donated ECS to the OTel project so that the two can be merged into a single open standard schema.

Semantic Conventions are great once you know how to use them, but at first the documentation can feel overwhelming, and OTel gives you many choices in how you use it. It helps to look at some of the example projects and to read through the specifications in the GitHub repositories (I found this more straightforward than working through the documentation on the website).
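To make the idea of a shared schema concrete, here is a minimal Python sketch (standard library only) of a structured log record that uses Semantic Convention attribute names, plus a check that a service emits the keys the team has agreed on. The attribute names (`service.name`, `http.request.method`, `http.response.status_code`, `url.path`) come from the OTel Semantic Conventions; the choice of which keys are *required*, the simplified record layout, and the `build_log_record`/`missing_keys` helpers are hypothetical, for illustration only.

```python
import json
from datetime import datetime, timezone

# Hypothetical team agreement: every service's request logs must carry these keys.
# The key names follow the OTel Semantic Conventions; which keys are required is
# an illustrative choice, not something the specification mandates.
REQUIRED_RESOURCE_KEYS = {"service.name", "service.version"}
REQUIRED_ATTRIBUTE_KEYS = {"http.request.method", "http.response.status_code", "url.path"}


def build_log_record(resource: dict, attributes: dict, body: str, severity: str = "INFO") -> dict:
    """Assemble a simplified log record loosely modelled on the OTel log data model."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "severity_text": severity,
        "body": body,
        "resource": resource,      # describes the service that emitted the record
        "attributes": attributes,  # describes this particular event
    }


def missing_keys(record: dict) -> set:
    """Return the agreed Semantic Convention keys that are absent from a record."""
    missing = REQUIRED_RESOURCE_KEYS - set(record["resource"])
    missing |= REQUIRED_ATTRIBUTE_KEYS - set(record["attributes"])
    return missing


if __name__ == "__main__":
    record = build_log_record(
        resource={"service.name": "checkout", "service.version": "1.4.2"},
        attributes={
            "http.request.method": "GET",
            "http.response.status_code": 200,
            "url.path": "/basket",
        },
        body="request completed",
    )
    print(json.dumps(record, indent=2))
    print("missing keys:", missing_keys(record) or "none")
```

Because every service uses the same key names, a single dashboard query (for example, filtering on `http.response.status_code`) works across all of them, whichever backend stores the data.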

I currently use Grafana Cloud and OpenSearch. Both tools support log aggregation and Application Performance Monitoring (APM), but the APM and tracing features work more smoothly in Grafana Cloud, which also supports the OpenTelemetry Protocol (OTLP) natively. The pricing models differ too: Grafana Cloud bills on usage, whereas OpenSearch is charged as a fixed compute cost.

The OTel Collector is a spaghetti junction for your telemetry data, configured through straightforward YAML. It can scrape Prometheus endpoints, parse log files, and receive data in a variety of formats and protocols. It also provides debugging tools such as zPages. The Collector has many more capabilities than these, and they are well worth exploring.
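The Collector's YAML configuration declares components in top-level `receivers`, `processors`, `exporters` and `extensions` sections, then wires them together under `service.pipelines`. The sketch below mirrors that layout key for key as a Python dictionary, so it can be transcribed directly to YAML. It covers the features mentioned above: a Prometheus scrape, log-file ingestion with the `filelog` receiver (both receivers ship in the contrib distribution), and the `zpages` debugging extension. The endpoint URL, file path and scrape target are placeholders. The `undeclared_components` helper is a hypothetical pre-flight check modelled on the Collector's own behaviour of refusing to start when a pipeline references a component that has not been declared.

```python
# Mirrors the Collector's YAML layout; each dictionary key corresponds to a YAML key.
# Endpoints, paths and scrape targets below are placeholders (assumptions).
COLLECTOR_CONFIG = {
    "receivers": {
        # Accept OTLP from instrumented applications (4317 = gRPC, 4318 = HTTP, the standard ports).
        "otlp": {"protocols": {"grpc": {"endpoint": "0.0.0.0:4317"},
                               "http": {"endpoint": "0.0.0.0:4318"}}},
        # Scrape an existing Prometheus metrics endpoint.
        "prometheus": {"config": {"scrape_configs": [{
            "job_name": "my-service",
            "scrape_interval": "30s",
            "static_configs": [{"targets": ["my-service:9090"]}],
        }]}},
        # Tail application log files from disk.
        "filelog": {"include": ["/var/log/my-service/*.log"]},
    },
    "processors": {"batch": {}},  # batch telemetry before export
    "exporters": {
        "otlphttp": {"endpoint": "https://otlp.example.com"},  # placeholder backend
        "debug": {"verbosity": "detailed"},  # print telemetry to the Collector's console
    },
    "extensions": {"zpages": {"endpoint": "localhost:55679"}},  # in-process debug pages
    "service": {
        "extensions": ["zpages"],
        "pipelines": {
            "traces": {"receivers": ["otlp"], "processors": ["batch"], "exporters": ["otlphttp"]},
            "metrics": {"receivers": ["otlp", "prometheus"], "processors": ["batch"],
                        "exporters": ["otlphttp"]},
            "logs": {"receivers": ["otlp", "filelog"], "processors": ["batch"],
                     "exporters": ["otlphttp", "debug"]},
        },
    },
}


def undeclared_components(config: dict) -> list:
    """List pipeline and extension references that have no matching top-level declaration."""
    problems = []
    for pipeline_name, pipeline in config["service"]["pipelines"].items():
        for section in ("receivers", "processors", "exporters"):
            declared = config.get(section, {})
            for component in pipeline.get(section, []):
                if component not in declared:
                    # section[:-1] turns "receivers" into "receiver", and so on
                    problems.append(f"{pipeline_name}: {section[:-1]} '{component}' is not declared")
    for extension in config["service"].get("extensions", []):
        if extension not in config.get("extensions", {}):
            problems.append(f"extension '{extension}' is not declared")
    return problems


if __name__ == "__main__":
    print(undeclared_components(COLLECTOR_CONFIG) or "all pipeline references are declared")
```

Keeping every route in one declarative file is what makes the Collector work as a junction: adding a new backend means declaring one exporter and listing it in the relevant pipelines, with no application changes.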

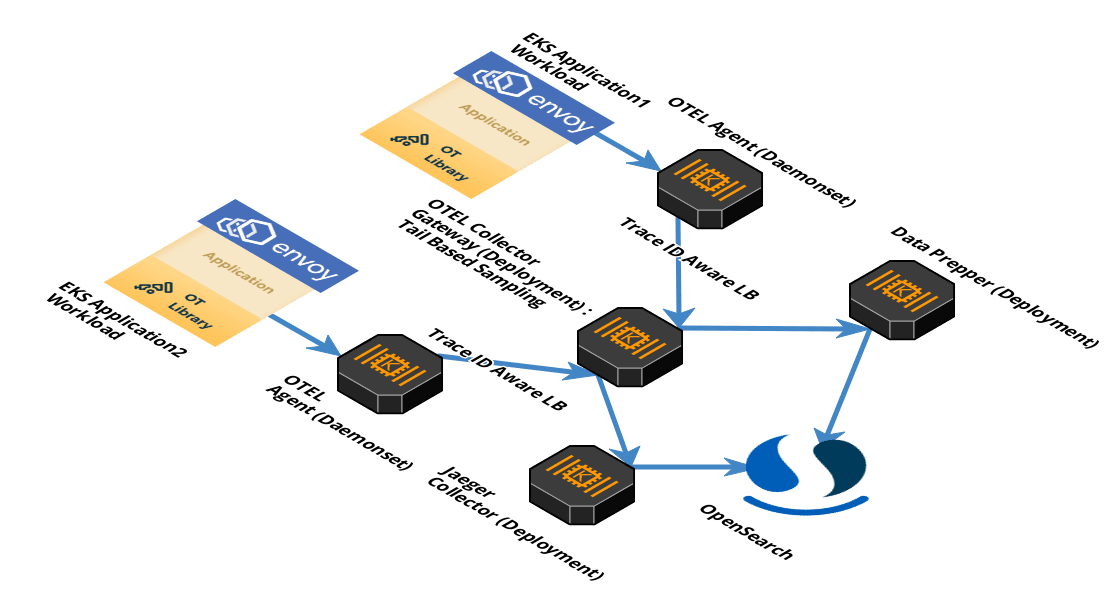

It is not always clear what the hurdles will be before implementation. One unexpected challenge surfaced during the implementation phase: our observability backend, OpenSearch, does not directly ingest telemetry that follows the Semantic Conventions. The data must first be converted to the Simple Schema for Observability (SS4O), which is part of the OpenSearch project, and OpenSearch offers tools such as Data Prepper to ingest OpenTelemetry data in SS4O format. However, relying on tools to convert between schemas clashes with OTel's portability principle. In future, I will prioritise backends that natively ingest OTLP data structured with the Semantic Conventions.

There are various ways to deploy OpenTelemetry. We chose to use agents that export logs directly from our applications via OTLP. This approach needs no intermediary, is portable because it requires little supporting infrastructure, and is well suited to cloud functions. It also gives us greater control over the structure of our telemetry data, since we export it directly from the source.

However, integrating agents into the applications adds cognitive load for engineers, because they become responsible for installing and maintaining the instrumentation code. I recently realised that this runs against one of the principles of the twelve-factor app: "A twelve-factor app never concerns itself with routing or storage of its output stream." In hindsight, a pull approach, in which applications write logs to stdout and external agents collect them, may be more advantageous. It reduces the burden on engineering teams and speeds up getting log collection in place.
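A minimal Python sketch of the pull approach: the application only writes one JSON object per line to stdout and knows nothing about where its logs end up. An external agent (for example, a Collector tailing container logs) is assumed to collect and forward them. The `JsonLineFormatter` class, the `configure_stdout_logging` helper and the field layout are illustrative assumptions, reusing Semantic Convention keys for the attributes.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single JSON line (hypothetical field layout)."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "severity_text": record.levelname,
            "body": record.getMessage(),
            # Optional Semantic Convention attributes passed via extra={"attributes": {...}}.
            "attributes": getattr(record, "attributes", {}),
        }
        return json.dumps(entry)  # json.dumps emits no newlines, so one record = one line


def configure_stdout_logging(stream=None) -> logging.Logger:
    """Send application logs to stdout only; routing and storage are left to the platform."""
    # StreamHandler defaults to stderr, so pass stdout explicitly.
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonLineFormatter())
    logger = logging.getLogger("app")
    logger.handlers[:] = [handler]  # replace, so repeated calls don't duplicate output
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = configure_stdout_logging()
    log.info("order placed", extra={"attributes": {"http.response.status_code": 201}})
```

Nothing in this code names a backend or protocol. Switching from OpenSearch to another backend would then be a change to the collecting agent's configuration, not to every service.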

I will use OTel again and follow the project closely. I hope that more observability backends will support OTLP natively in future, because integrating with OpenSearch was not as seamless as I had expected. OTel helped the team achieve a unified approach to application observability, using vendor-agnostic tooling to capture logs, traces, and metrics.
